TL;DR: create a .dockerignore file to filter out directories that won’t form part of the built image.

Long version: I’m working on the upcoming Kubernetes course with a massive deadline looming over me [available at VirtualPairProgrammers by the end of May, Udemy soon afterwards], so the last thing I need is a simple docker image build freezing up, apparently indefinitely!

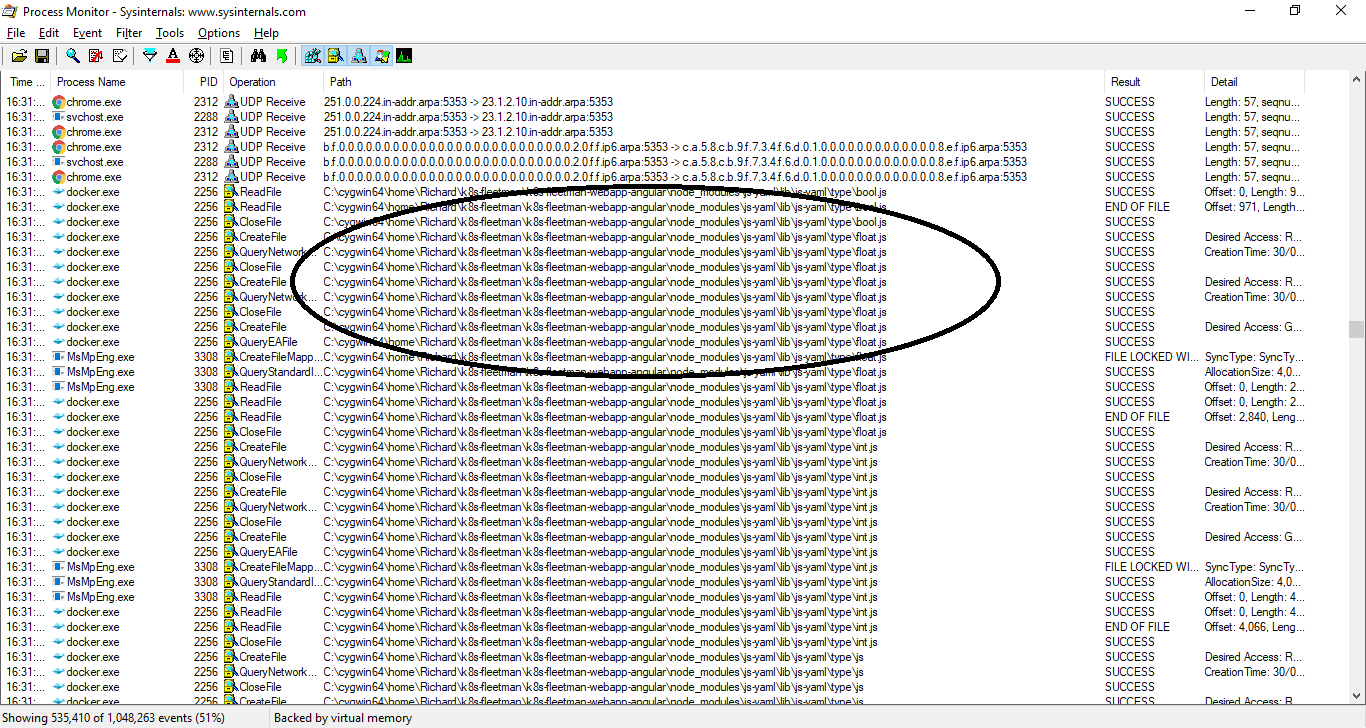

A quick inspection of running processes with Procmon (I’m developing on Windows) showed a massive number of files being read and closed:

This is just a small extract from the process log.

This [every file in the repository being visited] is normal behaviour of the docker image build process, because the entire directory is handed to Docker as the build context. I guess I’ve just been working on repositories with a relatively small number of files (Java projects). This being an Angular project, one of the folders is “/node_modules”, which contains a massive number of package modules, most of which aren’t actually used by the project but are there as local copies. This directory can be easily regenerated and isn’t considered part of the project’s actual source code. [edit to add, it’s the equivalent of a maven repository in Javaland. The .m2 directory is stored outside your project, so this isn’t a concern there].

Turns out, the /node_modules folder contained 33,335 files whilst the rest of the project contained just 64 files!
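If you want to check the same thing on your own project, here is a rough sketch of how the two counts could be produced. It assumes a Unix-style shell with `find` available (on Windows, something like Git Bash or WSL); the helper names `count_files` and `count_files_excluding` are made up for this example and aren’t part of any tool.

```shell
# Hypothetical helper: count regular files under a directory.
count_files() {
  find "$1" -type f | wc -l | tr -d ' '
}

# Hypothetical helper: count regular files under directory $1,
# skipping the subdirectory named $2 (e.g. node_modules).
count_files_excluding() {
  find "$1" -path "$1/$2" -prune -o -type f -print | wc -l | tr -d ' '
}

# Example usage from the project root:
#   count_files node_modules
#   count_files_excluding . node_modules
```

The second helper uses `-prune` so that `find` never descends into the excluded folder, which keeps the check itself quick on a large node_modules tree.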

Routinely, we .gitignore the /node_modules folder, and of course it makes sense to ignore this directory for the purposes of Docker also.

Simply create a file in the root of the project, .dockerignore:

    $ cat .dockerignore
    /node_modules

You might also consider adding .git to this ignore list.
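As a minimal sketch, the file with both entries could be generated like this. The function name `write_dockerignore` is hypothetical, and the argument is assumed to be the project root (the directory you run the docker build from).

```shell
# Hypothetical helper: write a .dockerignore into the project root given as $1.
# Overwrites any existing .dockerignore in that directory.
write_dockerignore() {
  cat > "$1/.dockerignore" <<'EOF'
/node_modules
.git
EOF
}

# Example usage from the project root:
#   write_dockerignore .
```

Anything else that is large and regenerable could be listed in the same way, one pattern per line.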

Now my docker image build is taking a few seconds instead of several hours. Perhaps I might meet this deadline after all…