Here’s the next in a short series (I think it will be three parts) where I’m musing about Microservices. My challenge is that VirtualPairProgrammers absolutely needs a course on it, but I’m not sure what form that will take. After writing these first two parts, I’m beginning to think that for development, we just need to extend our existing Spring Boot and JavaEE/Wildfly courses, but a further course on Deploying Microservices will be needed. This blog post will focus on that.

In part 3, I’ll return to the “dev” side of things and look at how using events can make your system more loosely coupled.

In Part 1 I described the overall concepts in Microservices, and it turns out to be not too complicated:

- Services aligned to specific business functions

- Highly cohesive services and loose coupling between them

- No integration databases (meaning each service will typically run its own data storage)

- Automated and continuous deployment.

Actually implementing a microservice is not too hard. Designing an overall architecture where the services collaborate to achieve an overall goal is a bit harder – but what’s really hard is deploying a microservice architecture. To put it another way, the real magic in microservices is in the “Ops” rather than the “Dev”.

Unless you’re planning on rolling your own infrastructure tools (Netflix did this and they’ve open sourced them – more later), you’re going to rely on open source tools, and lots of them. There are hundreds – probably more – that you could consider. It’s overwhelming, and new tools are emerging every day. To try to get you started on the Microservices path, this article is going to look at a very simple microservice and the not-so-simple tools needed to get it running.

Note: this article is not intended to be authoritative. These are just the choices we’ve made and the reasons why. There will be other solutions, and plenty of tools that I’ve never even heard of. I consider Microservices a journey, and our system is certain to evolve dramatically over the coming years.

Also, I’ll be sticking with the tools I know – so for the implementation of the actual service, I’ll probably use Spring Framework, JavaEE or associated technologies. If you’re coming from other languages, then of course you will have your own equivalents.

Our System at VirtualPairProgrammers.

As described in part 1, our website is deceptively simple – it’s a monolith, and behind the facade of the website we’re managing well over 20 business functions. It has worked well for us, but it was getting harder and harder to manage. So we decided to migrate to a microservice architecture.

But so much work to do! At least 20 microservices to build! Where do we start?

Well, one appealing thing about microservices (for me) is you don’t have to do a big bang migration – you can slowly morph your architecture over time, breaking away parts until the legacy can be retired or left in “hospice” mode.

So we started very simply – we have a business function where we need to calculate the VAT (Value Added Tax*) rate for any country in the world. In the monolith, this code is buried away in a service class somewhere – it’s a great candidate to be its own microservice:

Simple to describe, but actually deploying this raises some questions:

How to implement the service?

As stated, the “dev” part isn’t too hard – Spring Boot is a perfect fit for Microservices, and we’re very experienced with it here at VirtualPairProgrammers. But really there is infinite choice here; you could, for example, implement this as an EJB in a Wildfly container. Following the guidance in part 1, this service will have its own data store, and it doesn’t really matter what that is. For a simple service like this, we might even keep the data in memory and simply redeploy the service when VAT rules change.
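To make the “keep the data in memory” option concrete, here is a minimal sketch in plain Java. It is not our production code: the class name `InMemoryVatRates`, the method `rateFor` and the three sample rates are all assumptions made up for illustration. In a real Spring Boot service, the same lookup would sit behind a controller.

```java
import java.math.BigDecimal;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

/** Hypothetical in-memory VAT lookup; the rates below are illustrative sample data only. */
class InMemoryVatRates {
    // Standard rates as percentages, keyed by two-letter country code (sample values).
    private static final Map<String, BigDecimal> RATES = Map.of(
            "GB", new BigDecimal("20.0"),
            "DE", new BigDecimal("19.0"),
            "FR", new BigDecimal("20.0"));

    /** Returns the rate for a country code (case-insensitive), or empty if unknown. */
    static Optional<BigDecimal> rateFor(String countryCode) {
        if (countryCode == null) {
            return Optional.empty();
        }
        return Optional.ofNullable(RATES.get(countryCode.toUpperCase(Locale.ROOT)));
    }

    public static void main(String[] args) {
        System.out.println(rateFor("gb")); // prints Optional[20.0]
        System.out.println(rateFor("XX")); // prints Optional.empty
    }
}
```

Because the map is baked into the code, changing a rate means shipping a new build, which is exactly the “redeploy when the rules change” trade-off described above.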

Should the VAT Service be deployed to its own Machine (Virtual Machine)?

As mentioned in part 1, we want to be able to maintain total separation of the services, but at the same time we don’t want to incur the cost of running a separate Virtual Machine. This is where containerization comes in.

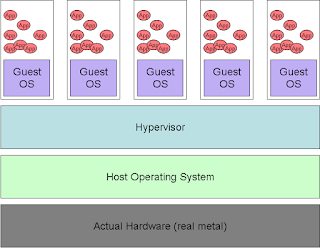

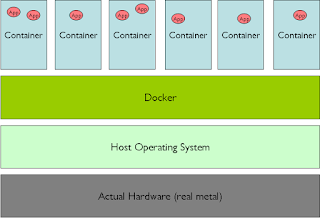

A container differs subtly from a Virtual Machine. A VM has its own operating system, but a container shares the host’s operating system. This subtle change has major payoffs, mainly that a container is very lightweight, fast and cheap to start up. Whereas a VM might take minutes to provision and boot, a container is up and running in seconds.

A traditional set of Virtual Machines – each VM has its own Operating System…

The most popular containerization system (quite overhyped at present) is Docker. This book is an excellent, practical introduction, and it definitely helped us to get started:

How do we call the now-remote service?

The usual answer here is to expose a REST interface to the VAT service. This is trivial to do using Boot or JavaEE.
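As a rough idea of how small such an endpoint can be, here is a sketch that uses the JDK’s built-in `com.sun.net.httpserver.HttpServer` so that it runs with no dependencies. That choice is only to keep the example self-contained; in practice we would use Boot or JavaEE as described. The path `/vat/{countryCode}`, the JSON field names, the port and the sample rates are all assumptions for illustration.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.Locale;
import java.util.Map;

/** Hypothetical REST facade for the VAT service, using only the JDK. */
class VatRestSketch {
    // Illustrative sample data, not real production rates.
    static final Map<String, String> RATES = Map.of("GB", "20.0", "DE", "19.0");

    /** Builds the JSON body for a country code, or returns null if it is unknown. */
    static String jsonFor(String countryCode) {
        String code = countryCode.toUpperCase(Locale.ROOT);
        String rate = RATES.get(code);
        return rate == null ? null
                : "{\"country\":\"" + code + "\",\"vatRate\":" + rate + "}";
    }

    /** Starts a server answering requests to /vat/{countryCode}; port 0 picks a free port. */
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/vat/", exchange -> {
            String code = exchange.getRequestURI().getPath().substring("/vat/".length());
            String json = jsonFor(code);
            byte[] body = (json == null ? "{\"error\":\"unknown country\"}" : json)
                    .getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().add("Content-Type", "application/json");
            // 200 with the rate, or 404 when the country code is not in the map.
            exchange.sendResponseHeaders(json == null ? 404 : 200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        start(8080);
        System.out.println("Try: curl http://localhost:8080/vat/GB");
    }
}
```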

But in this specific example, we are NOT exposing this API to end users – it is only going to be called from our own code. So, it’s actually not at all necessary to use REST. There will be many disagreements here, but you could certainly consider an RPC call! RPC libraries such as Java’s RMI, or more generic ones such as gRPC (http://www.grpc.io/), have a bit of a bad name, partly because the binary formats are not human-readable. For service-to-service APIs, RPC is actually fine – these libraries are high performance and work well.

(Human-readable forms, mainly JSON over HTTPS [aka REST if you’re not Roy Fielding], are the right choice for APIs that are being called by user interfaces, especially JavaScript frameworks).

(Something to think about here, we’ve replaced a very fast local call with what is now essentially a network call. Remember this will be an internal network call – see the stackexchange discussion here.)

How does the “client” (the monolith) know where the microservice is?

It wouldn’t be a great idea to have code like this:

// Call the VAT service

VATRate rate = rest.get("http://23.87.98.32:6379");

I hope that’s obvious – if we change the location of the service (e.g. change the port it is running on), then the client code will break. So: hardcoding the location of the services is out.

So what can we do? This is where Service Discovery via a Service Registry comes in.
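The core idea can be sketched in a few lines: the client asks for a service by a logical name and gets back its current location. The class below is a toy, in-process stand-in written for illustration only. The name `ServiceRegistrySketch`, the service name `vat-service` and the addresses are all made up, and real registries are separate networked systems that do far more than this.

```java
import java.net.URI;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

/** Toy service registry: maps a logical service name to its current location. */
class ServiceRegistrySketch {
    private final Map<String, URI> locations = new ConcurrentHashMap<>();

    /** Called when a service starts up or moves; overwrites any previous location. */
    void register(String serviceName, URI location) {
        locations.put(serviceName, location);
    }

    /** Called by clients instead of hardcoding an address. */
    Optional<URI> lookup(String serviceName) {
        return Optional.ofNullable(locations.get(serviceName));
    }

    public static void main(String[] args) {
        ServiceRegistrySketch registry = new ServiceRegistrySketch();
        registry.register("vat-service", URI.create("http://10.0.0.5:8080"));

        // The client only knows the name "vat-service", never the address.
        URI base = registry.lookup("vat-service")
                .orElseThrow(() -> new IllegalStateException("vat-service not registered"));
        System.out.println(base.resolve("/vat/GB"));

        // The service moves; the client code above does not need to change.
        registry.register("vat-service", URI.create("http://10.0.0.9:9090"));
        System.out.println(registry.lookup("vat-service").get().resolve("/vat/GB"));
    }
}
```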

There are many choices of Service Registries. Actually Java had a solution for this back in the 1990s, in the shape of the JINI framework. Sadly that was an idea ahead of its time and never caught on (it still exists as Apache River, but I’ve never heard of anyone using it).

More popular – Netflix invented one for their Microservice architecture, which is open sourced as Eureka. I haven’t used this, but I understand it is quite tied to AWS and is Java only. Do let us know if you’ve used this at all.

We are using Kubernetes (http://kubernetes.io/) because it provides a service registry (by running a private DNS service), and LOTS more, particularly…

What if the service crashes?

It’s no good if the microservice silently falls over and no-one notices for weeks. In our example, it wouldn’t be too bad, because the failure of the microservice would lead to a failure of the monolith: we’d see lots of HTTP 500s or whatever on the main website. But once we’ve scaled up to multiple services, this won’t be the case. This is where orchestration comes in – in brief, this is the technique of automatically managing your containers (orchestration is a bigger concept than this, but for our purposes, it is containers that will be orchestrated). The previously mentioned Kubernetes is a complete Orchestration service, originally built by Google to manage (allegedly) 2 billion containers.

Kubernetes can automatically monitor a service, and if it fails for any reason, get it back running again. Kubernetes also features load balancing, so if we do somehow manage to scale up to Netflix size, we’d ask Kubernetes to maintain multiple instances of the VAT service container, on separate physical instances, and Kubernetes would balance the incoming load between them.
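To give a feel for what “keep N instances running and spread the load” means, here is a toy sketch. It is emphatically not how Kubernetes is implemented. The `Instance` record, the `reconcile` and `pick` methods and the round-robin choice are all assumptions invented for this illustration, and the `healthy` flag stands in for a real health check.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of an orchestrator: replace failed instances, then balance requests. */
class ReconcileSketch {
    /** A hypothetical running container. */
    record Instance(String id, boolean healthy) {}

    /** Keeps healthy instances (up to 'desired') and adds replacements to reach 'desired'. */
    static List<Instance> reconcile(List<Instance> current, int desired) {
        List<Instance> next = new ArrayList<>();
        for (Instance instance : current) {
            if (instance.healthy() && next.size() < desired) {
                next.add(instance);
            }
        }
        int counter = 0;
        while (next.size() < desired) {
            // In a real system this is where a new container would be started.
            next.add(new Instance("replacement-" + counter++, true));
        }
        return next;
    }

    /** Simple round-robin: request n goes to instance n mod size. */
    static Instance pick(List<Instance> instances, long requestNumber) {
        return instances.get(Math.floorMod(requestNumber, instances.size()));
    }

    public static void main(String[] args) {
        List<Instance> running = List.of(new Instance("vat-a", true), new Instance("vat-b", false));
        List<Instance> fixed = reconcile(running, 3);
        System.out.println(fixed);
        for (long request = 0; request < 4; request++) {
            System.out.println("request " + request + " -> " + pick(fixed, request).id());
        }
    }
}
```

The point of the sketch is the shape of the loop: we declare how many instances we want, and something else continually compares that with reality and fixes the difference.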

There aren’t many books available on Kubernetes (yet) – but at the time of writing, the following book is in early release form:

So the overall message is, you’re going to need a lot of tooling, most of it relating to operations rather than development. In Part 3 (probably the final part) I’ll look at another way that services can communicate, leading to a very loosely coupled solution – this will be Event Based Collaboration…

Definitely on the right track with this. Lots of internal discussion going on in my own company about this (a Fortune 500 outfit).

Thanks Bob – yes, it needs careful thought. Running headfirst into microservices will lead to a massive headache in the long run. Hopefully we'll get a decent course put together on this!